Advanced Analysis: Technology, Safety, and Market Context

Navigating the Labyrinth of Safety and Regulation

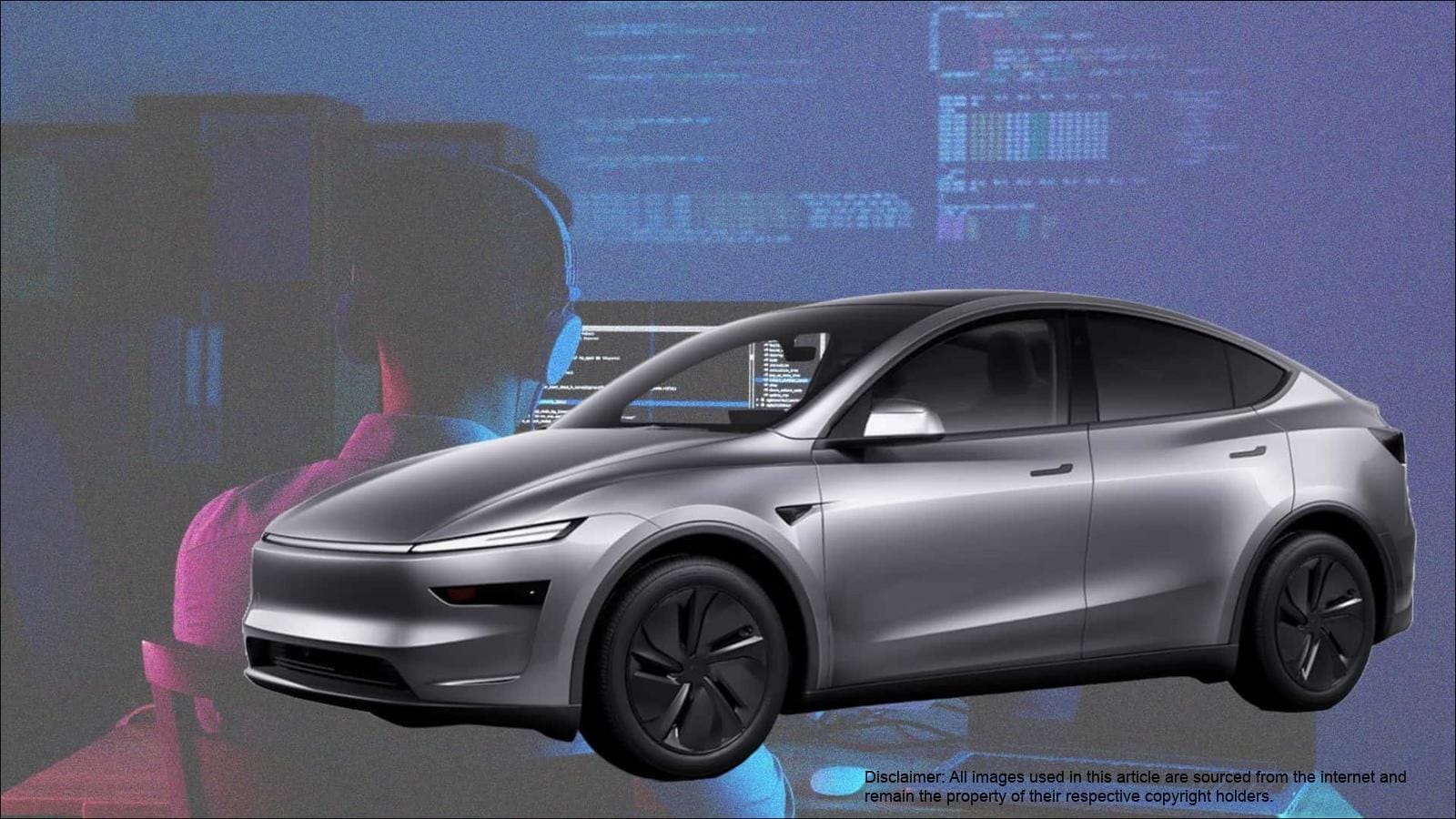

The road to driverless ride-hailing is full of challenges, and safety is chief among them. For Tesla, it remains a critical area of focus and scrutiny. The company has yet to release comprehensive safety data for its Full Self-Driving (Supervised) software, a point of contention for critics and regulators. Federal authorities are also investigating Tesla’s Autopilot and FSD advanced driver-assistance systems; the investigations cover hundreds of crashes, some of them fatal. Despite these headwinds and years of development delays, Tesla, under Elon Musk’s leadership, is confidently pressing ahead with its robotaxi ambitions in Austin, Texas.

Tesla’s Tech Stack: Cameras, AI, and the Human Backstop

Elon Musk has long championed a camera-and-AI approach to full self-driving, famously dismissing the need for sensors such as lidar and radar, which are staples for competitors like Waymo. Musk argues that relying on multiple sensor types can produce conflicting data that confuses the AI. “What we found is that when you have multiple sensors, they tend to get confused. So do you believe the camera or do you believe lidar?” he asked. Ironically, Tesla’s latest strategy, which incorporates remote human operators, brings its operating model closer to Waymo’s, at least in keeping a “human in the loop.” Tesla’s job postings suggest it is building a virtual reality rig that lets these teleoperators monitor vehicles and intervene when necessary. Their role, however, extends beyond remote control: these operators will also help develop the human-AI interface, shaping how remote human judgment and onboard AI work together in real time.
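
Musk’s question frames sensor disagreement as a binary choice. For illustration only, one textbook way multi-sensor systems reconcile readings is inverse-variance weighting: each sensor’s estimate counts in proportion to its assumed precision, and a simple consistency gate flags readings too far apart to combine. The sketch below is not a description of how Tesla or Waymo actually process sensor data; the names `Reading`, `consistent`, and `fuse`, along with the distances and noise variances, are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Reading:
    """One distance estimate to the same obstacle from a single sensor (hypothetical)."""
    sensor: str        # illustrative label, e.g. "camera" or "lidar"
    distance_m: float  # estimated distance to the obstacle, in metres
    variance: float    # assumed measurement noise variance, in m^2


def consistent(a: Reading, b: Reading, k: float = 3.0) -> bool:
    """Gate check: is the disagreement within k combined standard deviations?"""
    return abs(a.distance_m - b.distance_m) <= k * math.sqrt(a.variance + b.variance)


def fuse(readings: list[Reading]) -> tuple[float, float]:
    """Inverse-variance weighted mean of independent readings.

    Each reading is weighted by 1/variance, so a noisier sensor pulls the
    estimate less. Returns (fused distance, fused variance).
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    mean = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    return mean, 1.0 / total


# Hypothetical values: a noisier camera estimate and a tighter lidar estimate.
camera = Reading("camera", 42.0, 4.0)
lidar = Reading("lidar", 40.0, 0.25)

if consistent(camera, lidar):
    distance, variance = fuse([camera, lidar])
    print(f"fused distance: {distance:.2f} m, variance: {variance:.3f} m^2")
else:
    print("readings disagree beyond the gate; flag for review")
```

Because the lidar reading is assumed here to be sixteen times more precise, the fused estimate (about 40.12 m) lands much closer to it than to the camera’s 42 m, and its variance falls below either sensor’s alone. That is the redundancy argument behind multi-sensor designs in outline; Musk’s position is that a sufficiently capable camera-only system makes the extra hardware unnecessary.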

Learning from the Pack: Waymo’s Human-Assisted Model

Human oversight of autonomous vehicles is not new. Waymo, a leader in the field, uses what it calls “fleet response agents”: human assistants a vehicle can ping when it encounters a complex or confusing traffic scenario. These agents can view live exterior camera feeds, examine a 3D map of the vehicle’s surroundings, and even rewind sensor footage like a DVR to gain context before offering guidance. “As with the rest of our operations, a helpful human is no more than a touch of a button away,” Waymo explained in a blog post. Tesla’s emerging robotaxi setup appears to follow a similar philosophy: the vehicles drive themselves, but when they encounter situations beyond their current capabilities, a remote human operator can step in to assist. How well this hybrid approach works in Austin, Texas, will be closely watched in the coming weeks and months.

Autonomous Driving Approaches: Tesla vs. Waymo

| Feature | Tesla (Stated/Emerging Approach) | Waymo (Established Approach) |
|---|---|---|
| Primary Sensors | Cameras only, with AI-driven vision | Lidar, radar, and cameras, fused by AI |
| Stance on Lidar | Musk dismisses it as unnecessary and a potential source of sensor confusion | Considers it essential for redundancy and robust perception |
| Human Intervention Role | Remote teleoperators (monitoring and intervention) | Fleet response agents (remote assistance and guidance) |
| Initial Operational Autonomy | Aims for full autonomy but is launching with human oversight | Operates autonomously, with remote human assistance for edge cases |
| Development Philosophy | Rapid iteration on real-world data; camera-first AI | Structured testing, multi-sensor fusion, safety-first |